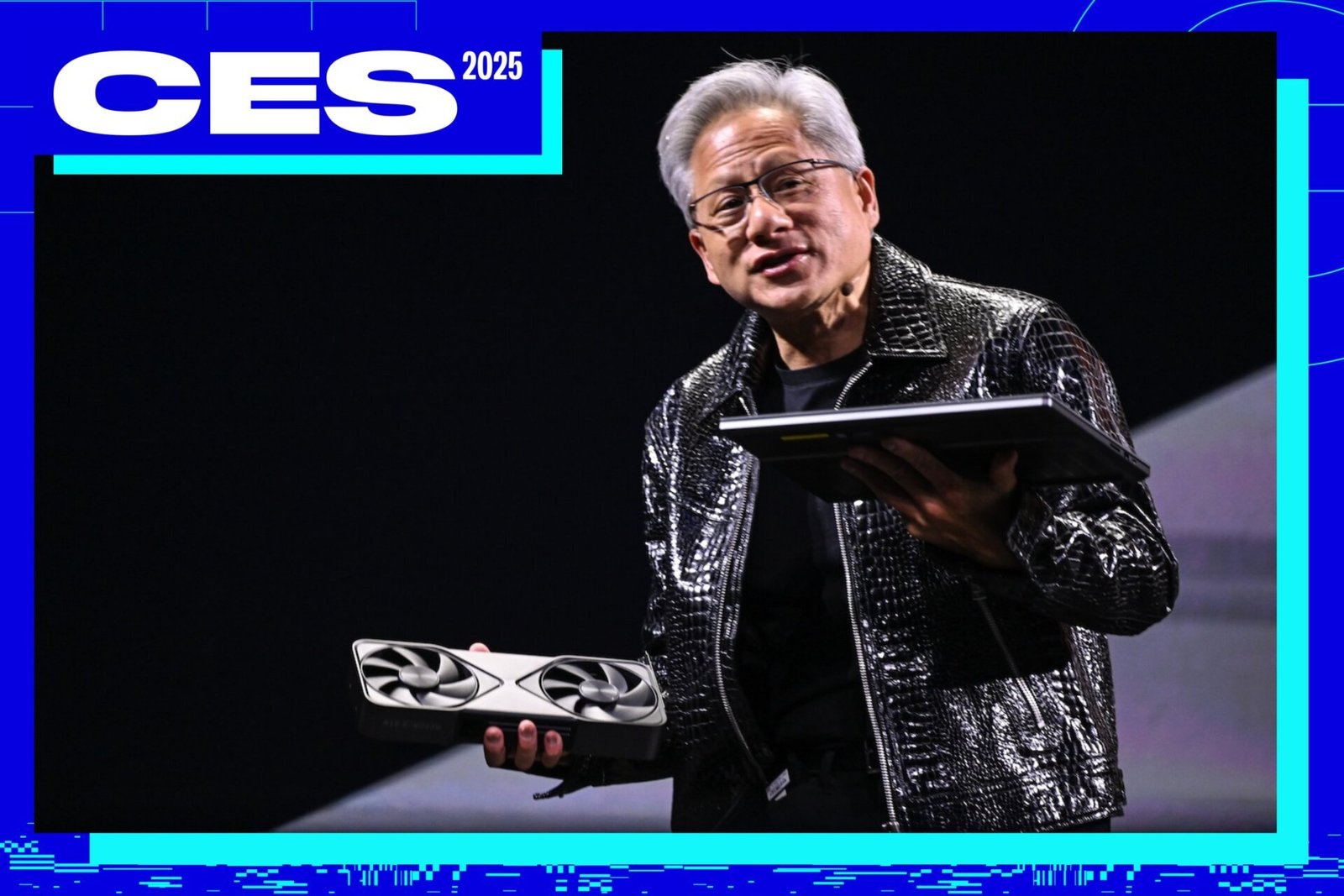

Nvidia is a “technology company,” not a “consumer” or “enterprise” company, CEO Jensen Huang emphasized. What exactly does he mean? Doesn’t Nvidia want consumers to spend hundreds or thousands of dollars on new, expensive RTX 50-series GPUs? Doesn’t it want more companies to buy its AI training chips? Nvidia is the kind of company that has many fingers in many pies. To hear Huang tell it, if the pie’s crust is the company’s chips, then AI is the filling.

“Our influence on technology will have an impact on the future of consumer platforms,” Huang said in a Q&A with reporters a day after his big opening keynote at CES, wearing his usual black jacket and the glow of AI hype. But how does a company like Nvidia fund all those epic AI experiments? Its H100 AI training chips have made Nvidia a tech powerhouse over the past two years, despite a few stumbles along the way. But Amazon and other companies are trying to build alternatives to break Nvidia’s monopoly. What happens when the competition cuts into the fun?

“We respond to customers wherever they are,” Huang said. Part of that is helping companies build “agentic AI,” meaning multiple AI models working together to complete complex tasks. That includes a number of AI toolkits designed to throw businesses a bone. While the H100 made Nvidia big and RTX keeps gamers coming back, the company wants its new $3,000 “Project Digits” AI processing hub to open “a new universe” for those who can use it. Who will use it? Nvidia says it’s a tool for researchers, scientists, and maybe students, or at least those who find $3,000 at the bottom of the $1.50 cup of instant ramen they’re eating for dinner for the fifth night in a row.

Nvidia surely wants you to know about the RTX 5090’s 3,352 TOPS of AI performance. Beyond that, Huang’s company dropped details on several software initiatives, both gaming and non-gaming. None of its announcements was more confusing than the “world foundation” AI models. These models are supposed to understand real-world physical environments, which could help autonomous cars or robots navigate their surroundings. This is technology aimed far into the future, and Huang admitted he could have done a better job conveying it to a crowd that mostly came to see the cool new GPUs.

“[The world foundation model] understands things like friction, inertia, grabbing, object presence, and elements, geometric and spatial understanding,” he said. “You know, the things that children know. They understand the physical world in a way that language models do not.”

Huang opened CES 2025 on January 6 with a keynote that filled the Michelob Ultra Arena at the Mandalay Bay casino in Las Vegas. Plenty of gamers were certainly there to see the latest RTX 50-series cards in the flesh, but many came to see what comes next for a company as profitable as Nvidia. RTX and Project Digits drew screams and shouts from the crowd, but when Huang spent half of his time talking about his world foundation model, the audience seemed less than enthusiastic.

It highlights how awkward AI messaging can be, especially for a company that owes much of its popularity to a devoted base of PC gamers. There’s so much talk about AI that it’s easy to forget Nvidia was in this game years before ChatGPT came on the scene. Nvidia’s in-game AI upscaling tech, DLSS, is almost six years old and improving all the time. It is currently one of the best AI upscalers in games, although it is limited to Nvidia cards. All of this came well before the advent of generative AI. Now, Nvidia promises that its new transformer models will greatly improve upscaling and ray reconstruction.

To top it off, the much-rumored multi-frame generation could deliver up to four times the performance on 50-series GPUs, at least if the game supports it. That’s a boon for those who can afford the new RTX 50 series, which tops out at $2,000 for the RTX 5090. But the gamers who would benefit most from frame gen are those who can only afford a low-end GPU. Huang declined to offer any hints about an RTX 5050 or 5060, joking, “We announced four cards, and you want more?”

The world foundation model is just a prototype, like most of the new AI software Nvidia has shown to the public. The real questions are when it will be ready for primetime and who will use it. Last year, Nvidia showed off oddball AI NPCs, in-game chatbots, AI nurses, and an audio generator. This year, it is betting on the world foundation model, along with many other AI “microservices,” including an amazing animated talking head meant to serve as your PC’s constant assistant. Perhaps some of them will stick. Where Nvidia hopes AI will replace nurses or audio engineers, we hope it doesn’t.

Huang considers Nvidia “a small company” with 32,000 employees worldwide. Yes, that’s not quite half the staff Meta has, but it’s hard to call Nvidia small when it comes to its influence on the AI training chip market, and that position gives it outsized influence over the entire tech industry. The more people use AI, the more they need to buy AI-specific GPUs, along with Nvidia’s other AI software. If everyone buys an at-home AI processing chip, they don’t need to rely on external data centers and chatbots. Nvidia, like every tech company, just needs to find a use for AI beyond replacing all our work.